Introduction

Building a private AKS cluster offers enhanced network security and complete control over ingress and egress traffic.

This tutorial walks through setting up a private AKS cluster with advanced networking using Pulumi, and integrating it into the hub-and-spoke network architecture built in the previous projects.

By the end of this guide, you’ll know how to integrate the AKS cluster with a Hub VNet and apply firewall policies established in the previous az-02-hub-vnet project.


The project modules

The ContainerRegistry.ts Module

To enhance security and ensure that every Docker image deployed to our AKS cluster comes from a source we control, this module creates a private Azure Container Registry (ACR). Because AKS pulls images only from this registry, we do not need to open the firewall to public image registries on the internet.


```typescript
import * as azure from '@pulumi/azure-native';

/**
 * Creates an Azure Container Registry (ACR).
 */
export default (
  name: string,
  {
    rsGroup,
    sku = azure.containerregistry.SkuName.Basic,
  }: {
    rsGroup: azure.resources.ResourceGroup;
    sku?: azure.containerregistry.SkuName;
  }
) =>
  // Registry names may only match '^[a-zA-Z0-9]*$', so remove all dashes from the name.
  new azure.containerregistry.Registry(
    name.replace(/-/g, ''),
    {
      resourceGroupName: rsGroup.name,
      sku: { name: sku },
      // Disable the admin user to enforce Microsoft Entra ID authentication
      adminUserEnabled: false,
      // The features below are only available in the Premium tier:
      // encryption,
      // networkRuleSet,
      // publicNetworkAccess and private link
    },
    { dependsOn: rsGroup }
  );
```
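The module only strips dashes before creating the registry, but Azure Container Registry names must be 5 to 50 alphanumeric characters, and the registry endpoint is the lower-cased name followed by `.azurecr.io`. The sketch below, assuming you would rather fail early than at deployment time, normalizes a name more strictly. `toAcrName` and `acrLoginServer` are hypothetical helpers, not part of the project. Also keep in mind that unless the registry name is set explicitly, Pulumi auto-naming typically appends a random suffix, so leave some headroom below the 50-character limit.

```typescript
// Hypothetical helpers (not part of the project): normalize and validate an ACR name.
// ACR names must be 5-50 characters long and contain only letters and digits.
const ACR_NAME_PATTERN = /^[a-zA-Z0-9]{5,50}$/;

const toAcrName = (name: string): string => {
  // Remove every non-alphanumeric character (dashes, underscores, dots, ...).
  const cleaned = name.replace(/[^a-zA-Z0-9]/g, '');
  if (!ACR_NAME_PATTERN.test(cleaned)) {
    throw new Error(
      `"${name}" becomes "${cleaned}", which is not a valid ACR name (5-50 alphanumeric characters).`
    );
  }
  return cleaned;
};

// The registry endpoint, e.g. the FQDN the firewall rule has to allow.
const acrLoginServer = (acrName: string): string =>
  `${acrName.toLowerCase()}.azurecr.io`;

// Usage
console.log(toAcrName('az-03-aks-cluster')); // az03akscluster
console.log(acrLoginServer('az03AksCluster')); // az03akscluster.azurecr.io
```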

The AksFirewallRules.ts Module

This module creates a FirewallPolicyRuleCollectionGroup whose network and application rules allow controlled outbound traffic from the AKS nodes. Only the traffic AKS actually needs is permitted; anything that no rule allows is denied by the firewall, which strengthens the security posture of our AKS cluster.


```typescript
import { currentRegionCode } from '@az-commons';
import * as azure from '@pulumi/azure-native';
import * as network from '@pulumi/azure-native/network';
import * as inputs from '@pulumi/azure-native/types/input';
import * as pulumi from '@pulumi/pulumi';
import { subnetSpaces } from '../config';

const netRules: pulumi.Input<inputs.network.NetworkRuleArgs>[] = [
  // Network rules for AKS
  {
    ruleType: 'NetworkRule',
    name: 'azure-net-services-tags',
    description: 'Allows internal services to connect to Azure Resources.',
    ipProtocols: ['TCP'],
    sourceAddresses: [subnetSpaces.aks],
    destinationAddresses: [
      'MicrosoftContainerRegistry',
      'AzureMonitor',
      'AzureBackup',
      'AzureKeyVault',
      'AzureContainerRegistry',
      'Storage',
      'AzureActiveDirectory',
    ],
    destinationPorts: ['443'],
  },
  {
    ruleType: 'NetworkRule',
    name: 'aks-net-allows-commons-dns',
    description: 'Allows common DNS servers.',
    ipProtocols: ['TCP', 'UDP'],
    // '*' applies this rule to every source address, not only the AKS subnet.
    sourceAddresses: ['*'],
    destinationAddresses: [
      // Azure
      '168.63.129.16',
      // Cloudflare
      '1.1.1.1',
      '1.0.0.1',
      // Google
      '8.8.8.8',
      '8.8.4.4',
    ],
    destinationPorts: ['53'],
  },
  {
    ruleType: 'NetworkRule',
    name: 'aks-net-allows-cf-tunnel',
    description: 'Allows Cloudflare Tunnel',
    ipProtocols: ['TCP', 'UDP'],
    sourceAddresses: [subnetSpaces.aks],
    destinationAddresses: [
      '198.41.192.167',
      '198.41.192.67',
      '198.41.192.57',
      '198.41.192.107',
      '198.41.192.27',
      '198.41.192.7',
      '198.41.192.227',
      '198.41.192.47',
      '198.41.192.37',
      '198.41.192.77',
      '198.41.200.13',
      '198.41.200.193',
      '198.41.200.33',
      '198.41.200.233',
      '198.41.200.53',
      '198.41.200.63',
      '198.41.200.113',
      '198.41.200.73',
      '198.41.200.43',
      '198.41.200.23',
    ],
    destinationPorts: ['7844'],
  },
  {
    ruleType: 'NetworkRule',
    name: 'aks-net-allows-to-devops',
    description: 'Allows AKS to access DevOps',
    ipProtocols: ['TCP', 'UDP'],
    sourceAddresses: [subnetSpaces.aks],
    destinationAddresses: [subnetSpaces.devOps],
    destinationPorts: ['80', '443', '22'],
  },
];

const appRules: pulumi.Input<inputs.network.ApplicationRuleArgs>[] = [
  // Application rules for AKS
  {
    ruleType: 'ApplicationRule',
    name: 'aks-fqdn',
    description: 'Azure Global required FQDN',
    sourceAddresses: [subnetSpaces.aks],
    targetFqdns: [
      // Target FQDNs
      `*.hcp.${currentRegionCode}.azmk8s.io`,
      'mcr.microsoft.com',
      '*.data.mcr.microsoft.com',
      'mcr-0001.mcr-msedge.net',
      'management.azure.com',
      'login.microsoftonline.com',
      'packages.microsoft.com',
      'acs-mirror.azureedge.net',
      // Allows Let's Encrypt
      'acme-v02.api.letsencrypt.org',
      // Allows the Cloudflare API
      'api.cloudflare.com',
    ],
    protocols: [{ protocolType: 'Https', port: 443 }],
  },
  {
    ruleType: 'ApplicationRule',
    name: `aks-app-allow-cloudflare`,
    description: 'Allows CF Tunnel to access to Cloudflare.',
    sourceAddresses: [subnetSpaces.aks],
    targetFqdns: [
      '*.argotunnel.com',
      '*.cftunnel.com',
      '*.cloudflareaccess.com',
      '*.cloudflareresearch.com',
    ],
    protocols: [
      { protocolType: 'Https', port: 443 },
      { protocolType: 'Https', port: 7844 },
    ],
  },
];

export default (
  name: string,
  {
    acr,
    rootPolicy,
  }: {
    acr: azure.containerregistry.Registry;
    // This FirewallPolicyRuleCollectionGroup needs to be linked to the root policy created in `az-02-hub-vnet`
    rootPolicy: {
      name: pulumi.Input<string>;
      resourceGroupName: pulumi.Input<string>;
    };
  }
) =>
  new network.FirewallPolicyRuleCollectionGroup(
    name,
    {
      resourceGroupName: rootPolicy.resourceGroupName,
      firewallPolicyName: rootPolicy.name,
      priority: 300,
      ruleCollections: [
        {
          name: 'net-rules-collection',
          priority: 300,
          ruleCollectionType: 'FirewallPolicyFilterRuleCollection',
          action: {
            type: network.FirewallPolicyFilterRuleCollectionActionType.Allow,
          },
          rules: netRules,
        },
        {
          name: 'app-rules-collection',
          priority: 301,
          ruleCollectionType: 'FirewallPolicyFilterRuleCollection',
          action: {
            type: network.FirewallPolicyFilterRuleCollectionActionType.Allow,
          },
          rules: [
            ...appRules,
            {
              ruleType: 'ApplicationRule',
              name: 'aks-allows-pull-arc',
              description: 'Only allows AKS to pull image from private ACR',
              sourceAddresses: [subnetSpaces.aks],
              targetFqdns: [pulumi.interpolate`${acr.name}.azurecr.io`],
              protocols: [{ protocolType: 'Https', port: 443 }],
            },
          ],
        },
      ],
    },
    { dependsOn: acr }
  );
```
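Because Azure Firewall denies any traffic that no rule allows, these collections behave like an allow-list. The sketch below is a simplified model for reasoning about that allow-list, not Azure Firewall's actual matching engine: it checks a source IP, destination FQDN and port against application rules shaped like aks-fqdn. The subnet `10.10.0.0/24` is a hypothetical stand-in for `subnetSpaces.aks`, and treating `*.` as matching any subdomain depth is an assumption to verify against the Azure Firewall documentation.

```typescript
// Simplified model of an Azure Firewall application rule (hypothetical type).
interface AppRule {
  name: string;
  sourceAddresses: string[]; // CIDRs or '*'
  targetFqdns: string[]; // exact names or '*.suffix' wildcards
  ports: number[];
}

// Convert a dotted IPv4 address to an unsigned 32-bit integer.
const ipToInt = (ip: string): number =>
  ip.split('.').reduce((acc, octet) => acc * 256 + Number(octet), 0);

// True when `ip` falls inside `cidr`; arithmetic avoids 32-bit sign issues.
const inCidr = (ip: string, cidr: string): boolean => {
  const [base, bitsText] = cidr.split('/');
  const size = 2 ** (32 - Number(bitsText ?? '32'));
  return Math.floor(ipToInt(ip) / size) === Math.floor(ipToInt(base) / size);
};

// '*.example.com' matches any subdomain of example.com (assumption); otherwise exact match.
const fqdnMatches = (host: string, pattern: string): boolean => {
  const h = host.toLowerCase();
  const p = pattern.toLowerCase();
  return p.startsWith('*.') ? h.endsWith(p.slice(1)) : h === p;
};

// Default deny: allowed only if one rule matches source, port and FQDN.
const isAllowed = (rules: AppRule[], srcIp: string, host: string, port: number): boolean =>
  rules.some(
    (r) =>
      r.sourceAddresses.some((c) => c === '*' || inCidr(srcIp, c)) &&
      r.ports.includes(port) &&
      r.targetFqdns.some((p) => fqdnMatches(host, p))
  );

// Example rule modeled on 'aks-fqdn'; 10.10.0.0/24 is a hypothetical AKS subnet.
const rules: AppRule[] = [
  {
    name: 'aks-fqdn',
    sourceAddresses: ['10.10.0.0/24'],
    targetFqdns: ['mcr.microsoft.com', '*.data.mcr.microsoft.com', 'management.azure.com'],
    ports: [443],
  },
];

console.log(isAllowed(rules, '10.10.0.4', 'mcr.microsoft.com', 443)); // true
console.log(isAllowed(rules, '10.10.0.4', 'eastus.data.mcr.microsoft.com', 443)); // true
console.log(isAllowed(rules, '10.10.0.4', 'registry-1.docker.io', 443)); // false: no rule
console.log(isAllowed(rules, '10.20.0.4', 'mcr.microsoft.com', 443)); // false: wrong subnet
```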

The VNet.ts Module

The Virtual Network (VNet) is the backbone of our AKS cluster: it provides the network environment and the subnet dedicated to the AKS nodes. The VNet is peered with the Hub VNet so that it can reach shared services, and all of its egress traffic is routed through the Hub’s firewall, so every outbound connection is controlled.

- Security Group: By default, a VNet allows resources in every subnet to reach the internet. To tighten this, a network security group (NSG) is created with the following default rules:

  - Block all outbound internet access from every subnet.

  - Allow VNet-to-VNet traffic so that hub-and-spoke connectivity keeps working.

  - Additional security rules can be passed in through parameters.


```typescript
import * as network from '@pulumi/azure-native/network';
import * as resources from '@pulumi/azure-native/resources';
import * as inputs from '@pulumi/azure-native/types/input';
import * as pulumi from '@pulumi/pulumi';

/**
 * By default, a VNet allows access to the internet, so we create a NetworkSecurityGroup to block it.
 * Only VNet-to-VNet and VNet-to-private-firewall-address communication is allowed.
 */
const createSecurityGroup = (
  name: string,
  {
    rsGroup,
    securityRules = [],
  }: {
    rsGroup: resources.ResourceGroup;
    securityRules?: pulumi.Input<inputs.network.SecurityRuleArgs>[];
  }
) =>
  new network.NetworkSecurityGroup(
    name,
    {
      resourceGroupName: rsGroup.name,
      securityRules: [
        ...securityRules,
        // Default rules: block internet access and only allow internal VNet access
        {
          name: `${name}-allows-vnet-outbound`,
          description: 'Allows Vnet to Vnet Outbound',
          priority: 4095,
          protocol: '*',
          access: 'Allow',
          direction: 'Outbound',
          sourceAddressPrefix: 'VirtualNetwork',
          sourcePortRange: '*',
          destinationAddressPrefix: 'VirtualNetwork',
          destinationPortRange: '*',
        },
        // Block direct access to the internet
        {
          name: `${name}-block-internet-outbound`,
          description: 'Block Internet Outbound',
          priority: 4096,
          protocol: '*',
          access: 'Deny',
          direction: 'Outbound',
          sourceAddressPrefix: '*',
          sourcePortRange: '*',
          destinationAddressPrefix: 'Internet',
          destinationPortRange: '*',
        },
      ],
    },
    { dependsOn: rsGroup }
  );

/**
 * Because this VNet is peered with the hub VNet, a route for '0.0.0.0/0'
 * to the firewall's private IP address is needed.
 */
const createRouteTable = (
  name: string,
  {
    rsGroup,
    routes,
  }: {
    rsGroup: resources.ResourceGroup;
    routes: pulumi.Input<inputs.network.RouteArgs>[];
  }
) =>
  new network.RouteTable(
    name,
    {
      resourceGroupName: rsGroup.name,
      routes,
    },
    { dependsOn: rsGroup }
  );

export default (
  name: string,
  {
    rsGroup,
    subnets,
    routes,
    peeringVnet,
    securityRules,
  }: {
    rsGroup: resources.ResourceGroup;
    subnets: inputs.network.SubnetArgs[];
    /** Optional additional rules for the NetworkSecurityGroup */
    securityRules?: pulumi.Input<inputs.network.SecurityRuleArgs>[];
    /** Optional routing rules for the RouteTable */
    routes?: pulumi.Input<inputs.network.RouteArgs>[];
    peeringVnet?: {
      name: pulumi.Input<string>;
      id: pulumi.Input<string>;
      resourceGroupName: pulumi.Input<string>;
    };
  }
) => {
  const sgroup = createSecurityGroup(name, { rsGroup, securityRules });
  const routeTable = routes
    ? createRouteTable(name, { rsGroup, routes })
    : undefined;

  const vnetName = name;
  const vnet = new network.VirtualNetwork(
    vnetName,
    {
      // Resource group name
      resourceGroupName: rsGroup.name,
      // Enable VM protection
      enableVmProtection: true,
      // Enable VNet encryption
      encryption: {
        enabled: true,
        enforcement:
          network.VirtualNetworkEncryptionEnforcement.AllowUnencrypted,
      },
      addressSpace: {
        addressPrefixes: subnets.map((s) => s.addressPrefix!),
      },
      subnets: subnets.map((s) => ({
        ...s,
        // Attach the NetworkSecurityGroup to every subnet if available
        networkSecurityGroup: sgroup ? { id: sgroup.id } : undefined,
        // Attach the RouteTable to every subnet if available
        routeTable: routeTable ? { id: routeTable.id } : undefined,
      })),
    },
    {
      // Ensure the VNet is created after its dependencies
      dependsOn: routeTable ? [sgroup, routeTable] : sgroup,
      // Ignore this property because peering is managed by separate VirtualNetworkPeering resources
      ignoreChanges: ['virtualNetworkPeerings'],
    }
  );

  // Create synced peering between `az-02-hub-vnet` and `az-03-aks-cluster`.
  // Peering must be created in both directions to establish the connection.
  if (peeringVnet) {
    // From `az-02-hub-vnet` to `az-03-aks-cluster`
    new network.VirtualNetworkPeering(
      `hun-to-${vnetName}`,
      {
        resourceGroupName: peeringVnet.resourceGroupName,
        virtualNetworkName: peeringVnet.name,
        allowVirtualNetworkAccess: true,
        allowForwardedTraffic: true,
        syncRemoteAddressSpace: 'true',
        remoteVirtualNetwork: { id: vnet.id },
        peeringSyncLevel: 'FullyInSync',
      },
      { dependsOn: vnet }
    );

    // From `az-03-aks-cluster` to `az-02-hub-vnet`
    new network.VirtualNetworkPeering(
      `${vnetName}-to-hub`,
      {
        resourceGroupName: rsGroup.name,
        virtualNetworkName: vnet.name,
        allowVirtualNetworkAccess: true,
        allowForwardedTraffic: true,
        syncRemoteAddressSpace: 'true',
        remoteVirtualNetwork: { id: peeringVnet.id },
        peeringSyncLevel: 'FullyInSync',
      },
      { dependsOn: vnet }
    );
  }

  return vnet;
};
```
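NSG rules are evaluated in ascending priority order and the first matching rule decides the outcome; custom rules may use priorities 100 to 4096. That is why rules passed through securityRules with a priority below 4095 take precedence over the two defaults added by createSecurityGroup. A minimal sketch of that evaluation, assuming the caller already knows which service tag the destination belongs to; real NSGs also match on protocol, ports and source, which this model leaves out.

```typescript
// Simplified NSG outbound rule: only priority, access and destination tag are modeled.
interface NsgRule {
  name: string;
  priority: number; // 100-4096 for custom rules; lower numbers are evaluated first
  access: 'Allow' | 'Deny';
  destination: string; // a service tag such as 'VirtualNetwork' or 'Internet', or '*'
}

// Return the first matching rule in priority order, or undefined if none matches
// (in Azure, the traffic would then fall through to the built-in default rules).
const firstMatch = (rules: NsgRule[], destinationTag: string): NsgRule | undefined => {
  for (const r of rules) {
    if (r.priority < 100 || r.priority > 4096) {
      throw new Error(`${r.name}: custom rule priority must be between 100 and 4096`);
    }
  }
  return [...rules]
    .sort((a, b) => a.priority - b.priority)
    .find((r) => r.destination === '*' || r.destination === destinationTag);
};

// Mirrors the two default rules created by createSecurityGroup
const defaults: NsgRule[] = [
  { name: 'allows-vnet-outbound', priority: 4095, access: 'Allow', destination: 'VirtualNetwork' },
  { name: 'block-internet-outbound', priority: 4096, access: 'Deny', destination: 'Internet' },
];

console.log(firstMatch(defaults, 'Internet')?.access); // Deny
console.log(firstMatch(defaults, 'VirtualNetwork')?.access); // Allow

// A hypothetical extra rule passed via securityRules wins because 300 < 4096
const withOverride: NsgRule[] = [
  ...defaults,
  { name: 'allow-internet-exception', priority: 300, access: 'Allow', destination: 'Internet' },
];
console.log(firstMatch(withOverride, 'Internet')?.name); // allow-internet-exception
```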

- Route Table: Because this VNet is peered with the hub, it needs a route table that sends all outbound traffic (0.0.0.0/0) to the private IP address of the firewall.
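Azure selects a route by longest prefix match, and when a user-defined route and a system route have the same prefix, the user-defined route wins. That is how a 0.0.0.0/0 route to the firewall overrides the system default route to the internet, while traffic to the VNet itself and to the peered hub still uses the more specific system routes. The sketch below models that selection, assuming the route table holds only the 0.0.0.0/0 route; all address ranges and the firewall IP `10.0.1.4` are hypothetical.

```typescript
type RouteSource = 'User' | 'System';

interface Route {
  prefix: string; // CIDR, e.g. '0.0.0.0/0'
  nextHop: string; // e.g. 'VnetLocal', 'VNetPeering', 'Internet', 'VirtualAppliance 10.0.1.4'
  source: RouteSource;
}

const ipToInt = (ip: string): number =>
  ip.split('.').reduce((acc, octet) => acc * 256 + Number(octet), 0);

const prefixLength = (cidr: string): number => Number(cidr.split('/')[1]);

// True when `ip` lies inside `cidr`.
const contains = (cidr: string, ip: string): boolean => {
  const [base] = cidr.split('/');
  const size = 2 ** (32 - prefixLength(cidr));
  return Math.floor(ipToInt(ip) / size) === Math.floor(ipToInt(base) / size);
};

// Longest prefix wins; on a tie, a user-defined route beats a system route.
const selectRoute = (routes: Route[], ip: string): Route | undefined =>
  routes
    .filter((r) => contains(r.prefix, ip))
    .sort(
      (a, b) =>
        prefixLength(b.prefix) - prefixLength(a.prefix) ||
        (a.source === 'User' ? -1 : 0) - (b.source === 'User' ? -1 : 0)
    )[0];

// Hypothetical spoke VNet 10.10.0.0/16, hub 10.0.0.0/16, firewall private IP 10.0.1.4
const routes: Route[] = [
  { prefix: '10.10.0.0/16', nextHop: 'VnetLocal', source: 'System' },
  { prefix: '10.0.0.0/16', nextHop: 'VNetPeering', source: 'System' },
  { prefix: '0.0.0.0/0', nextHop: 'Internet', source: 'System' },
  { prefix: '0.0.0.0/0', nextHop: 'VirtualAppliance 10.0.1.4', source: 'User' },
];

console.log(selectRoute(routes, '8.8.8.8')?.nextHop); // VirtualAppliance 10.0.1.4
console.log(selectRoute(routes, '10.10.2.5')?.nextHop); // VnetLocal
console.log(selectRoute(routes, '10.0.3.7')?.nextHop); // VNetPeering
```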


- VNet: Finally, the VNet itself is created, the route table and security group are attached to every provided subnet, and peering with the hub VNet is established in both directions.


The AKS.ts Module

- SSH Key Generation Custom Resource: An SSH key pair is required to configure the Linux nodes of an AKS cluster.

Because the core Pulumi SDK has no built-in SSH key generator, I use a Dynamic Resource Provider to create a custom resource that generates the SSH key pair at deployment time.


```typescript
import { generateKeyPair, RSAKeyPairOptions } from 'crypto';
import * as pulumi from '@pulumi/pulumi';
import * as forge from 'node-forge';

/** A core method to generate the SSH keys using node-forge */
const generateKeys = (options: RSAKeyPairOptions<'pem', 'pem'>) =>
  new Promise<{ publicKey: string; privateKey: string }>(
    (resolve, reject) => {
      generateKeyPair(
        'rsa',
        options,
        (err: Error | null, pK: string, prK: string) => {
          // Stop here on failure; the keys are undefined when err is set
          if (err) return reject(err);
          const publicKey = forge.ssh.publicKeyToOpenSSH(
            forge.pki.publicKeyFromPem(pK)
          );
          const privateKey = forge.ssh.privateKeyToOpenSSH(
            forge.pki.decryptRsaPrivateKey(
              prK,
              options.privateKeyEncoding.passphrase
            )
          );
          resolve({ publicKey, privateKey });
        }
      );
    }
  );

export type DeepInput<T> = T extends object
  ? { [K in keyof T]: DeepInput<T[K]> | pulumi.Input<T[K]> }
  : pulumi.Input<T>;

interface SshKeyInputs {
  password: string;
}

interface SshKeyOutputs {
  password: string;
  privateKey: string;
  publicKey: string;
}

/**
 * There is no native Pulumi component for SSH key generation.
 * This provider is implemented using a Pulumi feature called `Dynamic Resource Provider`.
 * Refer here for details: https://www.pulumi.com/docs/iac/concepts/resources/dynamic-providers/
 */
class SshResourceProvider implements pulumi.dynamic.ResourceProvider {
  constructor(private name: string) {}

  /** Executed when the Pulumi resource is created.
   * Generates the SSH key pair with the provided password and returns it as the resource outputs. */
  async create(
    inputs: SshKeyInputs
  ): Promise<pulumi.dynamic.CreateResult<SshKeyOutputs>> {
    const { publicKey, privateKey } = await generateKeys({
      modulusLength: 4096,
      publicKeyEncoding: {
        type: 'spki',
        format: 'pem',
      },
      privateKeyEncoding: {
        type: 'pkcs8',
        format: 'pem',
        cipher: 'aes-256-cbc',
        passphrase: inputs.password,
      },
    });

    return {
      id: this.name,
      outs: {
        password: inputs.password,
        publicKey,
        privateKey,
      },
    };
  }

  /** Executed when the Pulumi resource is updated.
   * Nothing is regenerated; the previously created outputs are returned as-is. */
  async update(
    id: string,
    old: SshKeyOutputs,
    news: SshKeyInputs
  ): Promise<pulumi.dynamic.UpdateResult<SshKeyOutputs>> {
    // No update needed
    return { outs: old };
  }
}

/** The SshGenerator resource uses the provider above to generate the keys and store them in the Pulumi state. */
export class SshGenerator extends pulumi.dynamic.Resource {
  declare readonly name: string;
  declare readonly publicKey: pulumi.Output<string>;
  declare readonly privateKey: pulumi.Output<string>;
  declare readonly password: pulumi.Output<string>;

  constructor(
    name: string,
    args: DeepInput<SshKeyInputs>,
    opts?: pulumi.CustomResourceOptions
  ) {
    const innerOpts = pulumi.mergeOptions(opts, {
      // Tell Pulumi to encrypt these outputs in the state; encryption and decryption are handled automatically
      additionalSecretOutputs: ['publicKey', 'privateKey', 'password'],
    });

    const innerInputs = {
      publicKey: undefined,
      privateKey: undefined,
      // Mark this input as a secret so it is encrypted in the state as well
      password: pulumi.secret(args.password),
    };

    super(
      new SshResourceProvider(name),
      `csp:SshGenerator:${name}`,
      innerInputs,
      innerOpts
    );
  }
}

// Export the SshGenerator resource as the default export of the module
export default SshGenerator;
```

This component also demonstrates how to securely store secrets within the Pulumi state.
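The provider relies on node-forge to turn the PEM public key into the single-line `ssh-rsa ...` format that AKS expects in linuxProfile. For illustration only, the same public-key conversion can be done with Node's built-in `crypto` module (assuming Node 16 or later, for JWK export and `base64url`) by writing the RFC 4251 wire format: the string `ssh-rsa`, then the exponent and modulus as `mpint` values. This is a sketch of an alternative, not what the project uses; it covers the public key only, and `toOpenSshPublicKey` is a hypothetical name.

```typescript
import { createPublicKey, generateKeyPairSync } from 'crypto';

// RFC 4251 "string": 4-byte big-endian length followed by the bytes.
const sshString = (data: Buffer): Buffer => {
  const length = Buffer.alloc(4);
  length.writeUInt32BE(data.length, 0);
  return Buffer.concat([length, data]);
};

// RFC 4251 "mpint": unsigned big-endian integer without redundant leading zeros;
// a 0x00 byte is prepended when the high bit is set so it is not read as negative.
const sshMpint = (magnitude: Buffer): Buffer => {
  let start = 0;
  while (start < magnitude.length - 1 && magnitude[start] === 0) start++;
  let bytes = magnitude.subarray(start);
  if (bytes[0] & 0x80) bytes = Buffer.concat([Buffer.from([0]), bytes]);
  return sshString(bytes);
};

// Convert a PEM (SPKI) RSA public key to a single-line OpenSSH public key.
const toOpenSshPublicKey = (publicKeyPem: string, comment = ''): string => {
  const jwk = createPublicKey(publicKeyPem).export({ format: 'jwk' });
  if (jwk.kty !== 'RSA' || !jwk.n || !jwk.e) throw new Error('Expected an RSA public key');
  const blob = Buffer.concat([
    sshString(Buffer.from('ssh-rsa')),
    sshMpint(Buffer.from(jwk.e, 'base64url')),
    sshMpint(Buffer.from(jwk.n, 'base64url')),
  ]);
  return `ssh-rsa ${blob.toString('base64')}${comment ? ` ${comment}` : ''}`;
};

// Usage: a 4096-bit key pair, the same size the dynamic provider generates.
const { publicKey } = generateKeyPairSync('rsa', {
  modulusLength: 4096,
  publicKeyEncoding: { type: 'spki', format: 'pem' },
  privateKeyEncoding: { type: 'pkcs8', format: 'pem' },
});
console.log(toOpenSshPublicKey(publicKey, 'aks-admin'));
```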

Furthermore, a helper method combines the SSH generator with a random password to create an SSH public/private key pair and stores the keys, together with the password, in Key Vault for AKS.


```typescript
// Part of the AKS.ts module; imports are omitted in this excerpt.
const createSsh = (
  name: string,
  vaultInfo?: {
    resourceGroupName: pulumi.Input<string>;
    vaultName: pulumi.Input<string>;
  }
) => {
  const sshName = name;
  const ssh = new SshGenerator(sshName, {
    password: new random.RandomPassword(name, { length: 50 }).result,
  });

  // Store the public key, private key and password in Key Vault
  if (vaultInfo) {
    [
      { name: `${sshName}-publicKey`, value: ssh.publicKey },
      { name: `${sshName}-privateKey`, value: ssh.privateKey },
      { name: `${sshName}-password`, value: ssh.password },
    ].forEach(
      (s) =>
        new azure.keyvault.Secret(
          s.name,
          {
            ...vaultInfo,
            secretName: s.name,
            properties: {
              value: s.value,
              contentType: s.name,
            },
          },
          {
            dependsOn: ssh,
            retainOnDelete: true,
          }
        )
    );
  }

  return ssh;
};
```
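Key Vault secret names may contain only letters, digits and dashes, and must be 1 to 127 characters long. The `${sshName}-publicKey` style names used by createSsh are therefore valid as long as the base name follows the same rule. A small pre-flight check, assuming you want an invalid name to fail before deployment rather than during it; `sshSecretNames` is a hypothetical helper.

```typescript
// Key Vault secret names: 1-127 characters, letters, digits and dashes only.
const KEY_VAULT_NAME_PATTERN = /^[0-9a-zA-Z-]{1,127}$/;

// Build the three secret names that createSsh stores, validating each one.
const sshSecretNames = (sshName: string): string[] => {
  const names = ['publicKey', 'privateKey', 'password'].map(
    (suffix) => `${sshName}-${suffix}`
  );
  for (const n of names) {
    if (!KEY_VAULT_NAME_PATTERN.test(n)) {
      throw new Error(`"${n}" is not a valid Key Vault secret name`);
    }
  }
  return names;
};

console.log(sshSecretNames('az-03-aks-cluster').join(', '));
// az-03-aks-cluster-publicKey, az-03-aks-cluster-privateKey, az-03-aks-cluster-password
```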

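- AKS Identity Creation: AKS can be configured to use Microsoft Entra ID for user authentication.

With this setup, users sign in to the AKS cluster with their Microsoft Entra credentials, and access to namespaces and cluster resources is managed through Entra groups and role assignments. The helper creates an Entra admin group, an app registration with a client secret, and grants the group the AKS Cluster User, AKS RBAC Reader and Reader roles on the resource group.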


```typescript
// Part of the AKS.ts module; imports and `currentPrincipal` are defined elsewhere in the module.
const createRBACIdentity = (
  name: string,
  rsGroup: azure.resources.ResourceGroup
) => {
  // Create the Entra admin group
  const adminGroup = new ad.Group(name, {
    displayName: `AZ ROL ${name.toUpperCase()}`,
    securityEnabled: true,
  });

  // Create the Entra app registration
  const appRegistration = new ad.ApplicationRegistration(name, {
    description: name,
    displayName: name,
    signInAudience: 'AzureADMyOrg',
  });

  // Add the current principal as an owner of the app
  new ad.ApplicationOwner(
    name,
    { applicationId: appRegistration.id, ownerObjectId: currentPrincipal },
    { dependsOn: appRegistration, retainOnDelete: true }
  );

  // Create the app client secret
  const appSecret = new ad.ApplicationPassword(
    name,
    { applicationId: appRegistration.id },
    { dependsOn: appRegistration }
  );

  // Grant Azure permissions to the admin group at the resource group level
  [
    {
      name: 'Azure Kubernetes Service Cluster User Role',
      id: '4abbcc35-e782-43d8-92c5-2d3f1bd2253f',
    },
    {
      name: 'Azure Kubernetes Service RBAC Reader',
      id: '7f6c6a51-bcf8-42ba-9220-52d62157d7db',
    },
    { name: 'Reader', id: 'acdd72a7-3385-48ef-bd42-f606fba81ae7' },
  ].forEach(
    (r) =>
      new azure.authorization.RoleAssignment(
        `${name}-${r.id}`,
        {
          principalType: 'Group',
          principalId: adminGroup.objectId,
          roleAssignmentName: r.id,
          roleDefinitionId: `/providers/Microsoft.Authorization/roleDefinitions/${r.id}`,
          scope: rsGroup.id,
        },
        { dependsOn: rsGroup }
      )
  );

  // Return the results
  return { adminGroup, appRegistration, appSecret };
};
```
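Role assignment names must be GUIDs, and the helper reuses each role definition ID as the assignment name. That works while each role is assigned once per scope, but assigning the same role to a second principal on the same resource group would collide, because the assignment's resource ID is built from the scope and that name. A common alternative, similar in spirit to the `guid()` function in ARM templates, is a deterministic name-based UUID (version 5, RFC 4122) derived from scope, principal and role. This is an illustrative sketch: `roleAssignmentGuid` and the choice of the RFC 4122 URL namespace are assumptions. In Pulumi the principal ID is an `Output`, so you would call it inside `pulumi.all(...).apply(...)`.

```typescript
import { createHash } from 'crypto';

// RFC 4122 URL namespace: a standard, fixed namespace UUID.
const NAMESPACE_URL = '6ba7b811-9dad-11d1-80b4-00c04fd430c8';

// Name-based UUID, version 5 (SHA-1), as defined in RFC 4122 section 4.3.
const uuidV5 = (name: string, namespace: string): string => {
  const nsBytes = Buffer.from(namespace.replace(/-/g, ''), 'hex');
  const hash = createHash('sha1').update(nsBytes).update(name, 'utf8').digest();
  const bytes = hash.subarray(0, 16);
  bytes[6] = (bytes[6] & 0x0f) | 0x50; // version 5
  bytes[8] = (bytes[8] & 0x3f) | 0x80; // RFC 4122 variant
  const hex = bytes.toString('hex');
  return `${hex.slice(0, 8)}-${hex.slice(8, 12)}-${hex.slice(12, 16)}-${hex.slice(16, 20)}-${hex.slice(20)}`;
};

// Hypothetical helper: one stable GUID per (scope, principal, role) combination.
// Input is lower-cased because Azure resource IDs are case-insensitive.
const roleAssignmentGuid = (scope: string, principalId: string, roleId: string): string =>
  uuidV5(`${scope}|${principalId}|${roleId}`.toLowerCase(), NAMESPACE_URL);

// Usage with placeholder values
const scope = '/subscriptions/<sub-id>/resourceGroups/<aks-rg>';
console.log(roleAssignmentGuid(scope, '<group-object-id>', '4abbcc35-e782-43d8-92c5-2d3f1bd2253f'));
```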

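- AKS Cluster Creation: Finally, we combine all the components to create the AKS cluster. Key elements worth noting in the code: the API server is private (public network access is disabled and enablePrivateCluster is set); local accounts are disabled, so authentication goes through Microsoft Entra ID with Azure RBAC; the outbound type is UserDefinedRouting, so egress traffic follows the VNet route table to the hub firewall; and the cluster identities are granted Contributor on the resource group and AcrPull on the private registry.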


```typescript
// Part of the AKS.ts module; imports and helpers such as `tenantId` are defined elsewhere in the module.
export default (
  name: string,
  {
    rsGroup,
    vnet,
    acr,
    nodeAdminUserName,
    vaultInfo,
    logWorkspaceId,
    tier = azure.containerservice.ManagedClusterSKUTier.Free,
    osDiskSizeGB = 128,
    osDiskType = azure.containerservice.OSDiskType.Managed,
    vmSize = 'Standard_B2ms',
  }: {
    rsGroup: azure.resources.ResourceGroup;
    acr?: azure.containerregistry.Registry;
    vnet: azure.network.VirtualNetwork;
    vmSize?: pulumi.Input<string>;
    osDiskSizeGB?: pulumi.Input<number>;
    osDiskType?: azure.containerservice.OSDiskType;
    nodeAdminUserName: pulumi.Input<string>;
    tier?: azure.containerservice.ManagedClusterSKUTier;
    logWorkspaceId?: pulumi.Input<string>;
    vaultInfo?: {
      resourceGroupName: pulumi.Input<string>;
      vaultName: pulumi.Input<string>;
    };
  }
) => {
  const aksIdentity = createRBACIdentity(name, rsGroup);
  const ssh = createSsh(name, vaultInfo);

  const aksName = name;
  const nodeResourceGroup = `${aksName}-nodes`;

  // Create the AKS cluster
  const aks = new azure.containerservice.ManagedCluster(
    aksName,
    {
      resourceGroupName: rsGroup.name,
      // The name of the node resource group.
      // This group is created and managed by AKS directly.
      nodeResourceGroup,
      dnsPrefix: aksName,

      // Disable public network access as this is a private cluster
      publicNetworkAccess: 'Disabled',
      apiServerAccessProfile: {
        // Do not allow running commands directly from the Azure portal
        disableRunCommand: true,
        // Enable the private cluster
        enablePrivateCluster: true,
        // Create a public DNS record that resolves to the private IP address of the API server.
        // This is necessary for MDM access through a Cloudflare tunnel later.
        enablePrivateClusterPublicFQDN: true,
        privateDNSZone: 'system',
      },

      // Add-on profiles to enable or disable built-in features
      addonProfiles: {
        // Enable Azure Policy. This feature is discussed in a separate topic.
        azurePolicy: { enabled: true },
        // Enable Container insights
        omsAgent: {
          enabled: Boolean(logWorkspaceId),
          config: logWorkspaceId
            ? { logAnalyticsWorkspaceResourceID: logWorkspaceId }
            : undefined,
        },
      },

      sku: {
        name: azure.containerservice.ManagedClusterSKUName.Base,
        tier,
      },
      supportPlan:
        azure.containerservice.KubernetesSupportPlan.KubernetesOfficial,

      // Node pool profile: subnet ID, auto-scaling and disk settings
      agentPoolProfiles: [
        {
          name: 'defaultnodes',
          mode: 'System',
          enableAutoScaling: true,
          count: 1,
          minCount: 1,
          maxCount: 3,
          vnetSubnetID: vnet.subnets.apply(
            (ss) => ss!.find((s) => s.name === 'aks')!.id!
          ),
          type: azure.containerservice.AgentPoolType.VirtualMachineScaleSets,
          maxPods: 50,
          enableFIPS: false,
          enableNodePublicIP: false,
          // Requires: az feature register --name EncryptionAtHost --namespace Microsoft.Compute
          enableEncryptionAtHost: true,
          // TODO: Enable this in PRD
          enableUltraSSD: false,
          osDiskSizeGB,
          osDiskType,
          vmSize,
          kubeletDiskType: 'OS',
          osSKU: 'Ubuntu',
          osType: 'Linux',
        },
      ],

      // Linux authentication profile: admin username and SSH public key
      linuxProfile: {
        adminUsername: nodeAdminUserName,
        ssh: { publicKeys: [{ keyData: ssh.publicKey }] },
      },

      // Service principal profile using the Entra app registration
      servicePrincipalProfile: {
        clientId: aksIdentity.appRegistration.clientId,
        secret: aksIdentity.appSecret.value,
      },
      identity: {
        type: azure.containerservice.ResourceIdentityType.SystemAssigned,
      },

      // Enable automatic upgrades
      autoUpgradeProfile: {
        upgradeChannel: azure.containerservice.UpgradeChannel.Stable,
      },

      // Disable local accounts and only allow authentication with Entra ID
      disableLocalAccounts: true,
      enableRBAC: true,
      aadProfile: {
        enableAzureRBAC: true,
        managed: true,
        adminGroupObjectIDs: [aksIdentity.adminGroup.objectId],
        tenantID: tenantId,
      },

      // Storage profile
      // TODO: adjust this to your environment's needs
      storageProfile: {
        blobCSIDriver: { enabled: true },
        diskCSIDriver: { enabled: true },
        fileCSIDriver: { enabled: true },
        snapshotController: { enabled: false },
      },

      // Network profile: Azure CNI with user-defined routing.
      // The VNet route table sends all outbound traffic to the hub VNet.
      networkProfile: {
        networkMode: azure.containerservice.NetworkMode.Transparent,
        networkPolicy: azure.containerservice.NetworkPolicy.Azure,
        networkPlugin: azure.containerservice.NetworkPlugin.Azure,
        outboundType: azure.containerservice.OutboundType.UserDefinedRouting,
        loadBalancerSku: 'Standard',
      },
    },
    {
      dependsOn: [
        rsGroup,
        aksIdentity.appRegistration,
        aksIdentity.appSecret,
        ssh,
        vnet,
      ],
    }
  );

  // Grant the Contributor role to the AKS identity on the resource group.
  // This is required to create Azure resources for ingress, such as IP addresses or load balancers.
  new azure.authorization.RoleAssignment(
    `${aksName}-contribute-rsGroup`,
    {
      principalType: 'ServicePrincipal',
      principalId: aks.identity.apply((i) => i!.principalId!),
      roleAssignmentName: 'b24988ac-6180-42a0-ab88-20f7382dd24c',
      roleDefinitionId:
        '/providers/Microsoft.Authorization/roleDefinitions/b24988ac-6180-42a0-ab88-20f7382dd24c',
      scope: rsGroup.id,
    },
    { dependsOn: [rsGroup, aks] }
  );

  // If an ACR is provided, grant the kubelet identity permission to pull images from it
  if (acr) {
    new azure.authorization.RoleAssignment(
      `${aksName}-arc-pull`,
      {
        description: `${aksName} AcrPull permission`,
        scope: acr.id,
        principalType: 'ServicePrincipal',
        principalId: aks.identityProfile.apply(
          (i) => i!.kubeletidentity.objectId!
        ),
        // The ID of the built-in AcrPull role
        roleAssignmentName: '7f951dda-4ed3-4680-a7ca-43fe172d538d',
        roleDefinitionId:
          '/providers/Microsoft.Authorization/roleDefinitions/7f951dda-4ed3-4680-a7ca-43fe172d538d',
      },
      { dependsOn: [aks, acr] }
    );
  }

  return aks;
};
```
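With Azure CNI (networkPlugin Azure), pods receive IP addresses from the node subnet, so the aks subnet has to be sized for the node pool's maxCount and maxPods. The Azure CNI planning guidance, as I understand it, is `(nodes + 1) + (nodes + 1) * maxPods`, where the extra node covers an upgrade surge, and Azure reserves 5 addresses in every subnet. A worked sketch for this node pool (maxCount 3, maxPods 50); the helper names are hypothetical, so confirm the formula against the current AKS networking documentation before sizing a production subnet.

```typescript
// IPs needed by an Azure CNI node pool: (nodes + 1) + (nodes + 1) * maxPods.
const requiredPodSubnetIps = (maxNodes: number, maxPods: number): number =>
  maxNodes + 1 + (maxNodes + 1) * maxPods;

// Azure reserves 5 IP addresses in every subnet.
const AZURE_RESERVED_IPS = 5;

// Largest prefix length (smallest subnet) that still holds `ips` addresses.
const smallestPrefixFor = (ips: number): number => {
  for (let prefix = 32; prefix >= 0; prefix--) {
    if (2 ** (32 - prefix) >= ips) return prefix;
  }
  throw new Error('Too many addresses for IPv4');
};

// maxCount: 3 and maxPods: 50, as configured in the agent pool above
const needed = requiredPodSubnetIps(3, 50) + AZURE_RESERVED_IPS;
console.log(needed); // 209
console.log(`/${smallestPrefixFor(needed)}`); // /24
```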

Developing a Private AKS Cluster

Our goal is to configure all necessary elements for the AKS Cluster, which include:

- Resource Group: A container for organizing related Azure resources, simplifying management and cost tracking.

- Container Registry: The main repository for all Docker images used by our private AKS, ensuring secure image deployment.

- AKS Firewall Policy: To enable outbound internet connectivity, we must configure firewall rules that allow AKS nodes to communicate securely with essential Azure services.

- Virtual Network (VNet): The primary network hosting our AKS subnets, integrated with our Hub VNet to ensure secure and managed traffic flow.

- AKS Cluster: An Azure-managed Kubernetes service, configured with advanced security and connectivity options.
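The VNet bullet depends on how Azure picks a route for each packet. The AKS subnet gets a user-defined route that sends 0.0.0.0/0 to the hub firewall. Azure's system routes still cover the VNet's own address space and the peered hub VNet. Azure selects the route with the longest matching prefix, so traffic between the VNets is delivered directly and everything else goes through the firewall.

The sketch below models only that longest-prefix rule for IPv4. It does not model tie-breaking between routes that share the same prefix. The address ranges 10.10.0.0/16 and 10.0.0.0/16 and the firewall IP 10.0.1.4 are hypothetical placeholders, not values from the project config.

```typescript
// Simplified model of route selection for the AKS subnet.
// All address ranges and IPs are hypothetical placeholders.

interface Route {
  name: string;
  addressPrefix: string; // IPv4 CIDR, e.g. '10.10.0.0/16'
  nextHopType: 'VnetLocal' | 'VNetPeering' | 'VirtualAppliance';
  nextHopIpAddress?: string;
}

// Converts a dotted IPv4 address to a number in 0 .. 2^32 - 1.
function ipToInt(ip: string): number {
  return ip.split('.').reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

function prefixLength(cidr: string): number {
  return Number(cidr.split('/')[1]);
}

// True when `ip` lies in the same aligned block as the CIDR base address.
function inCidr(ip: string, cidr: string): boolean {
  const base = cidr.split('/')[0];
  const blockSize = 2 ** (32 - prefixLength(cidr));
  return Math.floor(ipToInt(ip) / blockSize) === Math.floor(ipToInt(base) / blockSize);
}

// Among the matching routes, the longest prefix wins.
function selectRoute(ip: string, routes: Route[]): Route | undefined {
  return routes
    .filter((r) => inCidr(ip, r.addressPrefix))
    .sort((a, b) => prefixLength(b.addressPrefix) - prefixLength(a.addressPrefix))[0];
}

const aksSubnetRoutes: Route[] = [
  // System routes Azure adds for the AKS VNet and the peered hub VNet.
  { name: 'system-aks-vnet', addressPrefix: '10.10.0.0/16', nextHopType: 'VnetLocal' },
  { name: 'system-hub-peering', addressPrefix: '10.0.0.0/16', nextHopType: 'VNetPeering' },
  // The user-defined route: everything else goes to the firewall.
  {
    name: 'route-vnet-to-firewall',
    addressPrefix: '0.0.0.0/0',
    nextHopType: 'VirtualAppliance',
    nextHopIpAddress: '10.0.1.4',
  },
];

console.log(selectRoute('10.10.2.7', aksSubnetRoutes)?.name); // system-aks-vnet
console.log(selectRoute('10.0.1.4', aksSubnetRoutes)?.name); // system-hub-peering
console.log(selectRoute('20.50.1.1', aksSubnetRoutes)?.name); // route-vnet-to-firewall
```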


import { StackReference } from '@az-commons';
import * as resources from '@pulumi/azure-native/resources';
import * as config from '../config';
import Aks from './Aks';
import FirewallRule from './AksFirewallRules';
import ContainerRegistry from './ContainerRegistry';
import VNet from './VNet';

//Reference the outputs of `az-01-shared` and `az-02-hub-vnet`.
const sharedStack = StackReference<config.SharedStackOutput>('az-01-shared');
const hubVnetStack = StackReference<config.HubVnetOutput>('az-02-hub-vnet');

//Create the resource group
const rsGroup = new resources.ResourceGroup(config.azGroups.aks);

//Create the private Container Registry for AKS
const acr = ContainerRegistry(config.azGroups.aks, { rsGroup });

//Apply the AKS firewall rules as a new rule collection group
//linked to the hub firewall policy created in `az-02-hub-vnet`
FirewallRule(config.azGroups.aks, {
  acr,
  rootPolicy: {
    resourceGroupName: hubVnetStack.rsGroup.name,
    name: hubVnetStack.firewallPolicy.name,
  },
});

//Create the Virtual Network with its subnets
const vnet = VNet(config.azGroups.aks, {
  rsGroup,
  subnets: [
    {
      name: 'aks',
      addressPrefix: config.subnetSpaces.aks,
    },
  ],
  //Allow outbound traffic from the VNet to the firewall's private IP
  securityRules: [
    {
      name: `allows-vnet-to-hub-firewall`,
      description: 'Allows Vnet to hub firewall Outbound',
      priority: 300,
      protocol: '*',
      access: 'Allow',
      direction: 'Outbound',
      //For an outbound rule the source is the VNet and the destination is the firewall
      sourceAddressPrefix: 'VirtualNetwork',
      sourcePortRange: '*',
      destinationAddressPrefix: hubVnetStack.firewall.address.apply(
        (ip) => `${ip}/32`
      ),
      destinationPortRange: '*',
    },
  ],
  //Route all outbound traffic (0.0.0.0/0) to the firewall's private IP
  routes: [
    {
      name: 'route-vnet-to-firewall',
      addressPrefix: '0.0.0.0/0',
      nextHopIpAddress: hubVnetStack.firewall.address,
      nextHopType: 'VirtualAppliance',
    },
  ],
  //Peer with the hub VNet
  peeringVnet: {
    name: hubVnetStack.hubVnet.name,
    id: hubVnetStack.hubVnet.id,
    resourceGroupName: hubVnetStack.rsGroup.name,
  },
});

//Create the AKS cluster
const aks = Aks(config.azGroups.aks, {
  rsGroup,
  nodeAdminUserName: config.azGroups.aks,
  vaultInfo: {
    vaultName: sharedStack.vault.name,
    resourceGroupName: sharedStack.rsGroup.name,
  },
  logWorkspaceId: sharedStack.logWorkspace.id,
  tier: 'Free',
  vmSize: 'Standard_B2ms',
  acr,
  vnet,
});

//Export the information that will be used in the other projects
export default {
  rsGroup: { name: rsGroup.name, id: rsGroup.id },
  arc: { name: acr.name, id: acr.id },
  aksVnet: { name: vnet.name, id: vnet.id },
  aks: { name: aks.name, id: aks.id },
};
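Network security group rules are evaluated in priority order, lowest number first, and the first rule that matches decides the outcome. If no custom rule matches, Azure's default rules apply: AllowVnetOutBound at 65000, AllowInternetOutBound at 65001 and DenyAllOutBound at 65500.

The simplified model below checks outbound traffic from the AKS subnet. It models the custom priority-300 rule the way its description reads: from the VNet to the hub firewall. It also makes three simplifications:
- Service tags are reduced to fixed CIDR lists.
- Ports and protocols are ignored.
- All addresses are hypothetical placeholders.

```typescript
// Simplified outbound NSG evaluation for the AKS subnet.
// Hypothetical address plan: AKS VNet 10.10.0.0/16, hub VNet 10.0.0.0/16,
// hub firewall private IP 10.0.1.4.

type Matcher = (ip: string) => boolean;

function ipToInt(ip: string): number {
  return ip.split('.').reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// True when `ip` lies in the aligned block described by the CIDR.
function inCidr(ip: string, cidr: string): boolean {
  const [base, bits] = cidr.split('/');
  const blockSize = 2 ** (32 - Number(bits));
  return Math.floor(ipToInt(ip) / blockSize) === Math.floor(ipToInt(base) / blockSize);
}

const cidrs = (list: string[]): Matcher => (ip) => list.some((c) => inCidr(ip, c));
const anyAddress: Matcher = () => true;
// Service tags reduced to fixed address lists.
const virtualNetwork = cidrs(['10.10.0.0/16', '10.0.0.0/16']);
const internet: Matcher = (ip) => !virtualNetwork(ip);

interface NsgRule {
  name: string;
  priority: number;
  access: 'Allow' | 'Deny';
  source: Matcher;
  destination: Matcher;
}

const outboundRules: NsgRule[] = [
  // Custom rule, modelled as its description reads: VNet -> hub firewall.
  {
    name: 'allows-vnet-to-hub-firewall',
    priority: 300,
    access: 'Allow',
    source: virtualNetwork,
    destination: cidrs(['10.0.1.4/32']),
  },
  // Azure's default outbound rules.
  { name: 'AllowVnetOutBound', priority: 65000, access: 'Allow', source: virtualNetwork, destination: virtualNetwork },
  { name: 'AllowInternetOutBound', priority: 65001, access: 'Allow', source: anyAddress, destination: internet },
  { name: 'DenyAllOutBound', priority: 65500, access: 'Deny', source: anyAddress, destination: anyAddress },
];

// Rules are checked from the lowest priority number up; the first match decides.
function evaluate(rules: NsgRule[], src: string, dst: string): NsgRule {
  const matches = rules
    .slice()
    .sort((a, b) => a.priority - b.priority)
    .filter((r) => r.source(src) && r.destination(dst));
  // DenyAllOutBound matches everything, so matches[0] always exists.
  return matches[0];
}

const nodeIp = '10.10.1.5';
console.log(evaluate(outboundRules, nodeIp, '10.0.1.4').name); // allows-vnet-to-hub-firewall
console.log(evaluate(outboundRules, nodeIp, '10.10.2.9').name); // AllowVnetOutBound
console.log(evaluate(outboundRules, nodeIp, '20.50.1.1').name); // AllowInternetOutBound
```

A route only changes the next hop, so the NSG still sees each packet's real destination. In this model, internet-bound traffic from the nodes is matched by AllowInternetOutBound, and the hub firewall policy is what actually filters it.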

Deployment and Cleanup

Deploying the Stack

To deploy the stack, execute the pnpm run up command. This provisions the necessary Azure resources. We can verify the deployment as follows:

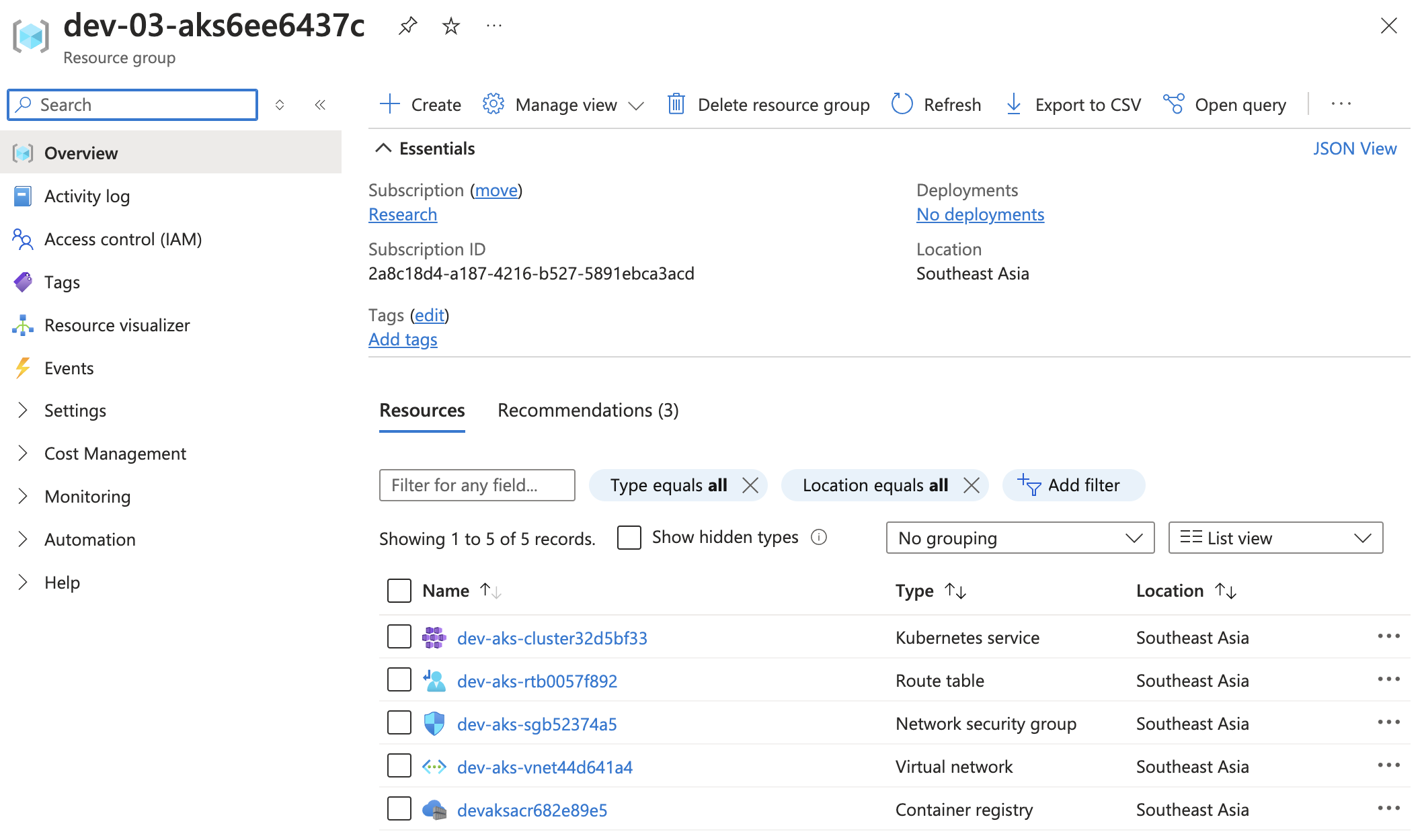

- Successfully deployed Azure resources:

Overview of successfully deployed Azure resources.

Overview of successfully deployed Azure resources.

Cleaning Up the Stack

To remove the stack and clean up all associated Azure resources, run the pnpm run destroy command. This ensures that any resources no longer needed are properly deleted.
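Both commands follow Pulumi's dependency graph. A resource is created only after the resources it depends on exist, and destroy removes dependents before their dependencies. The sketch below assumes that the pnpm run up and pnpm run destroy scripts wrap pulumi up and pulumi destroy. It applies Kahn's topological sort to a hand-written graph of the main resources in the index code.

The edges come from the arguments each function receives there. Two things are left out: the hub-stack outputs, and the extra entries in the AKS module's dependsOn list (identity, secret, SSH key). Pulumi also creates independent resources in parallel, so this prints one valid sequential order, not Pulumi's actual schedule.

```typescript
// Hand-written dependency graph of the main resources in the index code.
// Each entry lists the resources it depends on.
const dependsOn: Record<string, string[]> = {
  resourceGroup: [],
  acr: ['resourceGroup'],
  firewallRules: ['acr'],
  vnet: ['resourceGroup'],
  aks: ['resourceGroup', 'acr', 'vnet'],
};

// Kahn's algorithm: repeatedly emit resources whose dependencies are all done.
function creationOrder(graph: Record<string, string[]>): string[] {
  const names = Object.keys(graph);
  const unmet: Record<string, number> = {};
  for (const name of names) unmet[name] = graph[name].length;

  const ready = names.filter((n) => unmet[n] === 0);
  const order: string[] = [];
  while (ready.length > 0) {
    const next = ready.shift()!;
    order.push(next);
    // Every resource depending on `next` now has one fewer unmet dependency.
    for (const name of names) {
      if (graph[name].indexOf(next) !== -1) {
        unmet[name] -= 1;
        if (unmet[name] === 0) ready.push(name);
      }
    }
  }
  if (order.length !== names.length) throw new Error('Dependency cycle detected');
  return order;
}

const up = creationOrder(dependsOn);
const destroy = up.slice().reverse();
console.log(up.join(' -> ')); // resourceGroup -> acr -> vnet -> firewallRules -> aks
console.log(destroy.join(' -> ')); // aks -> firewallRules -> vnet -> acr -> resourceGroup
```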

Conclusion

In this tutorial, we’ve implemented a private AKS cluster with advanced networking features using Pulumi. We set up a private Container Registry, configured firewall rules, and integrated the cluster with a Hub VNet. As a result, outbound traffic is routed through the hub firewall, images are pulled only from the private registry, and cluster access goes through Entra ID. Together, these steps give the AKS cluster a secure foundation on which to build a production-grade infrastructure.

References

- Outbound network and FQDN rules for AKS clusters

- Dynamic resource providers

- Use EntraID role-based access control for AKS

- Use a service principal with AKS

- Best Practices for Private AKS Clusters

Next

Day 06: Implements a private CloudPC and DevOps Agent Hub with Pulumi

The next tutorial walks through setting up a secure CloudPC and DevOps agent hub with Pulumi, to improve the management and operational capabilities of our private AKS environment.

Thank You

Thank you for taking the time to read this guide! I hope it has been helpful. Feel free to explore further, and happy coding! 🌟✨

Steven | GitHub